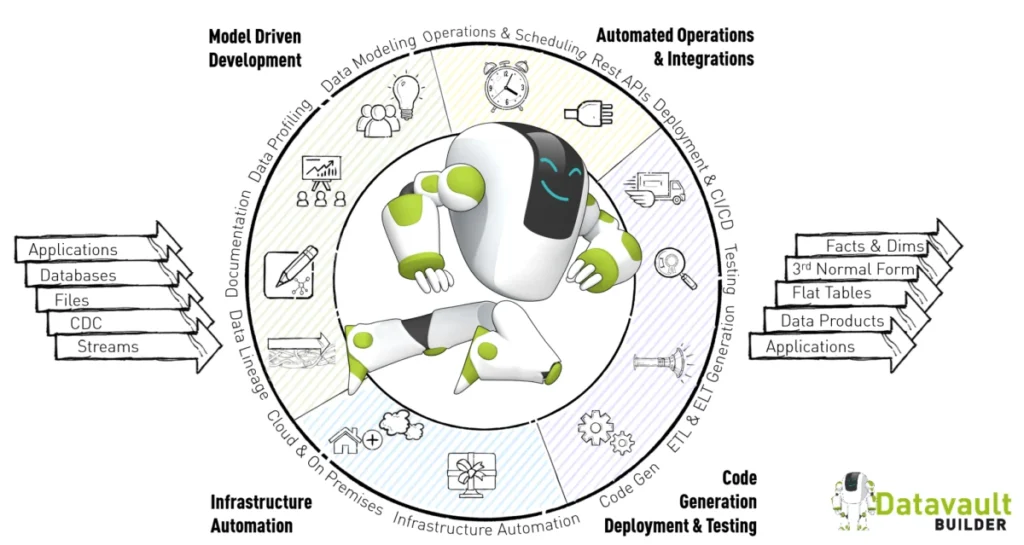

Data engineers who need streamlined ELT and modeling workflows with automated documentation, without endless custom coding

Data architects designing scalable, cloud-native Lakehouse solutions that are future-proof

BI teams in enterprises looking for faster, cleaner access to analytics-ready data on Databricks

Data scientists who need reliable, high-quality data for trusted insights

AI innovators who want to build on trusted, high-quality data in the platform, delivering production-ready, governed, and maintained GenAI and AI use cases that generate ROI

Project leads who aim to de-risk projects and deliver results faster, with automated and governed workflows and full traceability of delivered work

It's the confidence of business users in the new data infrastructure, and the trustworthiness of the data, where DVB stepped in.

The documentation aspect of it is a massive bonus.

Being able to click into a business rule and just say: this is how this specific thing works from source to target. The way business rules are kept really atomic and simple has supported us in keeping people’s confidence high while building the new DWH.

Kristofer Walker

Group IM&T Business Intelligence Manager

Datavault Builder integrates directly with Databricks as the target database. All Data Vault models and ELT pipelines are generated natively in Databricks – no middleware or extra layers required.

Datavault Builder does not replace your Databricks environment; it runs on top of it. It generates code that executes directly in Databricks, so you can keep your current setup.

Datavault Builder handles relational data, files, JSON, IoT streams, and logs. All of it can be managed and historized in a single Lakehouse architecture.

You save time and reduce risk: instead of hand-coding, you get automation, built-in governance, lineage, documentation, and ready-to-use standards like bi-temporality.

Datavault Builder is built for data engineers, data architects, and enterprise BI teams that want to accelerate their Databricks projects without losing governance or flexibility.